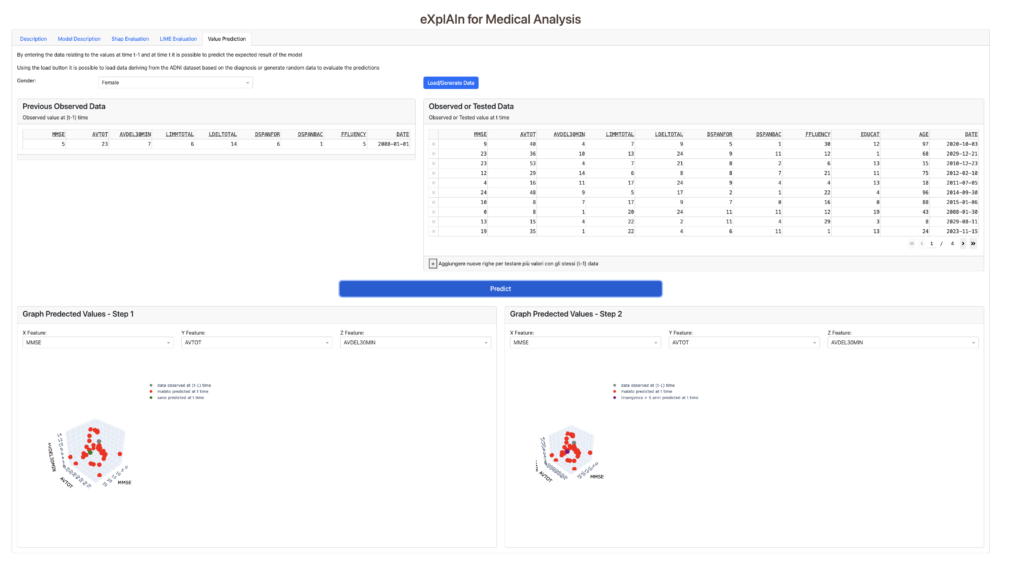

ExplAIn Medical Analysis (EMA) is an artificial intelligence system aimed at providing clear and accessible guidance for early diagnosis and monitoring of Alzheimer’s disease progression. The software is based on explainable machine learning techniques and was developed through interdisciplinary collaboration involving the CTNLab at the Institute of Cognitive Sciences and Technologies of CNR, the Institute of Biomedical Technologies of CNR, IRCCS Fondazione Santa Lucia in Rome, Policlinico Umberto I in Rome, the University of Pavia, and Sapienza University of Rome.

Software based on explainable machine learning employs algorithms and models designed to give transparent, interpretable insight into how decisions are made. In many conventional machine learning approaches, such as deep neural networks, the decision-making process is opaque and difficult to interpret, which is why such models are often described as “black boxes”.

Explainable machine learning techniques address this issue by providing explanations or justifications for the model’s decisions. These explanations help users, such as clinicians, understand why a given prediction or recommendation was made. This enhances trust in the model’s outputs and enables users to identify potential biases, errors, or limitations in the data or model architecture.
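To make the idea of an explanation concrete, the following minimal sketch shows one simple form it can take: for a logistic model, each feature's contribution to the log-odds is its weight multiplied by its value, so a prediction can be broken down feature by feature. This is an illustration only and is not EMA's actual method. The feature names (`age`, `memory_score`, `education_years`), the weights, the bias, and the patient values are all hypothetical and are not derived from any clinical data.

```python
import math

# Hypothetical weights of an already-trained logistic model.
# Illustrative values only: not taken from EMA or from any real clinical data.
WEIGHTS = {"age": 0.04, "memory_score": -0.30, "education_years": -0.05}
BIAS = -1.0


def predict_with_explanation(features):
    """Return the predicted probability and each feature's additive
    contribution (weight * value) to the model's log-odds."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


# Hypothetical patient record (made-up values).
patient = {"age": 75, "memory_score": 6, "education_years": 8}
probability, contributions = predict_with_explanation(patient)

# Rank features by the absolute size of their contribution, largest first.
ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)

print(f"predicted probability: {probability:.2f}")
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
```

A positive contribution pushes the prediction toward the positive class and a negative one pushes it away, which gives a user a direct reason for the output. More complex models generally need dedicated attribution techniques to produce a comparable breakdown.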

References

D’Amore, F. M., Moscatelli, M., Malvaso, A., D’Antonio, F., Rodini, M., Panigutti, M., … & Caligiore, D. (2024). Explainable machine learning on clinical features to predict and differentiate Alzheimer’s progression by sex: Toward a clinician-tailored web interface. Journal of the Neurological Sciences, 123361.

D’Amore, F. M., Mirino, P., D’Antonio, F., Malvaso, A., D’Addario, S. L., Catalano, M., Rodini, M., Panigutti, M., Carlesimo, G. A., Guariglia, C., & Caligiore, D. (2023). Explainable machine learning to predict and differentiate Alzheimer’s progression by gender. Poster presented at the XVIII Convegno Nazionale della Società Italiana di Neurologia (SINDem), Florence, 23-25 November 2023.